Interview :: SEO

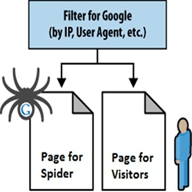

Cloaking is a black hat SEO technique in which a website serves two different versions of a page: one designed for human visitors and another for search engine crawlers. Its purpose is to show crawlers different content from what users actually see. Cloaking violates Google's guidelines because users who click a search result land on content different from what they expected. It should therefore never be used to improve your site's SEO.

Some Examples of Cloaking:

- Presenting a page of HTML text to search engines while showing a page of images or Flash to visitors

- Inserting text or keywords into a page only when it is requested by a search engine crawler, not by a human visitor
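One way to spot the second kind of cloaking is to request the same URL twice, once with a crawler-like User-Agent and once with a browser User-Agent, and then compare the visible text of the two responses. The minimal sketch below covers only the comparison step and assumes you already have both HTML responses as strings. The function names and the 0.8 similarity threshold are hypothetical choices, not a Google rule.

```python
from difflib import SequenceMatcher
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects non-empty text nodes (script/style contents are not filtered in this sketch)."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        if data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered text at the end of the document
    return " ".join(parser.chunks)


def looks_cloaked(html_for_crawler: str, html_for_visitor: str,
                  threshold: float = 0.8) -> bool:
    """Flag the page if the two versions' text similarity falls below a
    hypothetical heuristic threshold."""
    ratio = SequenceMatcher(None, visible_text(html_for_crawler),
                            visible_text(html_for_visitor)).ratio()
    return ratio < threshold


bot_page = "<html><body><p>Cheap flights cheap hotels cheap cars</p></body></html>"
user_page = "<html><body><img src='banner.png'></body></html>"
print(looks_cloaked(bot_page, user_page))  # True: the visitor sees no text at all
print(looks_cloaked(bot_page, bot_page))   # False: both versions are identical
```

A real check would also need to account for legitimate variation, such as ads, dates, or personalized content, which is why the threshold is only a heuristic.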

HTML is case-insensitive: tag names and attribute names mean the same thing whether they are written in uppercase, lowercase, or a mix, so `<P>` and `<p>` are the same element. However, HTML code is conventionally written in lowercase.
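Python's standard `html.parser` module illustrates this. It reports tag and attribute names in lowercase no matter how they were written in the source. The `TagCollector` class below is a hypothetical illustration, not part of any SEO tool.

```python
from html.parser import HTMLParser


class TagCollector(HTMLParser):
    """Records every start tag and its attributes as the parser reports them."""

    def __init__(self) -> None:
        super().__init__()
        self.tags = []  # list of (tag_name, [(attr_name, attr_value), ...])

    def handle_starttag(self, tag, attrs):
        # html.parser has already converted the tag name and attribute names to lowercase.
        self.tags.append((tag, attrs))


collector = TagCollector()
collector.feed('<DIV Class="Intro"><P>Hello</P></DIV>')
print(collector.tags)
# [('div', [('class', 'Intro')]), ('p', [])]
```

The attribute *value* `Intro` keeps its original case. Only the tag and attribute names are case-insensitive.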

SEO: It stands for search engine optimization. It is the process of increasing a website's online visibility and its organic (free, unpaid) traffic. It is all about optimizing your website to achieve higher rankings in search engine result pages. SEO is a part of SEM, and it brings you only organic traffic.

There are two types of SEO:

- On-Page SEO: It deals with optimizing the website itself, such as its content, title tags, meta descriptions, and internal links, for maximum visibility in search engines.

- Off-Page SEO: It deals with activities outside your own website, chiefly gaining natural backlinks from other websites.

SEM: It stands for search engine marketing. It involves buying advertising space on search engine result pages. It goes beyond SEO and includes other methods of getting more traffic, such as pay-per-click (PPC) advertising. It is one part of your overall internet marketing strategy. Traffic generated through SEM is considered especially valuable because it is targeted: the ads are shown to people who are already searching for related keywords.

SEM includes both SEO and paid search, but in practice it mostly relies on paid listings such as pay-per-click (PPC) ads. Search ad networks generally follow a PPC payment structure, which means you pay only when a visitor clicks your ad, not when the ad is merely displayed.
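The PPC payment rule can be expressed as simple arithmetic. Impressions cost nothing, and the bill is the number of clicks multiplied by the cost per click (CPC). This is a minimal sketch: the figures are made-up example values, and a fixed CPC is a simplifying assumption, since real ad auctions set the price per click.

```python
def ppc_cost(impressions: int, click_through_rate: float, cost_per_click: float) -> float:
    """Estimate PPC spend: only clicks are billed, impressions are free."""
    clicks = impressions * click_through_rate  # expected number of clicks
    return clicks * cost_per_click


# 10,000 impressions at a 2% click-through rate and $0.50 per click
print(ppc_cost(10_000, 0.02, 0.50))
```

With these example numbers, 200 expected clicks cost about $100, even though the ad was displayed 10,000 times.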

- Google Webmaster Tools: Google Webmaster Tools (renamed Google Search Console in 2015) is a free set of SEO tools offered by Google that lets you monitor and maintain your site's presence in Google Search. It alerts you when something goes wrong with your website, such as crawl errors, large numbers of 404 pages, malware issues, or manual penalties. In other words, it is the channel through which Google communicates with webmasters. Because it covers so much, you may not need many of the expensive third-party SEO tools.

- Google Analytics: It is a freemium web analytics service offered by Google. Introduced in November 2005, it tracks and reports website traffic and provides detailed statistics about it. It is a set of statistical and analytical tools that monitor your site's performance, telling you about your visitors and their activities, engagement metrics such as time on page, the search terms or keywords that bring visitors to your site, and more.

- Open Site Explorer: This Moz tool (since replaced by Moz Link Explorer) provides link statistics for a URL, such as the overall number of links, the number of domains linking to it, and the anchor text distribution.

- Alexa: It was a ranking system that ranked websites by their web traffic data. The lower a site's Alexa rank, the more traffic it receives, with rank 1 being the most visited site. Amazon retired Alexa.com in May 2022.

- Website Grader: It is a free SEO tool from HubSpot that grades websites on key metrics such as security, mobile readiness, performance, and SEO.

- Google Keyword Planner: This Google tool estimates the search traffic for your target keywords and suggests related keywords with high traffic, so you can shortlist the most relevant keywords from its suggestions.

- Plagiarism Checker: Various tools, such as smallseotools.com and plagiarisma.net, check content for plagiarism. Using them helps you avoid duplicate content and publish only unique, original content on your site.

The title tag should ideally be 50-60 characters long, because Google generally displays only the first 50 to 60 characters of a title. If your title is under 60 characters, it is more likely to be displayed in full. Similarly, the meta description should be about 150-160 characters long, because search engines tend to truncate descriptions longer than 160 characters. Google actually truncates by pixel width, so these character counts are approximate.
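The approximate limits in the paragraph above (about 60 characters for titles and 160 for meta descriptions) can be turned into a quick pre-publication check. This is a minimal sketch, and the function name `check_snippet_lengths` is hypothetical. Because Google measures pixel width rather than characters, a title that passes this check can still be cut off if it uses many wide letters.

```python
# Approximate character limits; Google truncates by pixel width, so treat them as rough guides.
TITLE_MAX = 60
DESCRIPTION_MAX = 160


def check_snippet_lengths(title: str, description: str) -> list:
    """Return warnings for a title or meta description likely to be truncated."""
    warnings = []
    if len(title) > TITLE_MAX:
        warnings.append(f"title is {len(title)} chars; may be cut after ~{TITLE_MAX}")
    if len(description) > DESCRIPTION_MAX:
        warnings.append(
            f"description is {len(description)} chars; may be cut after ~{DESCRIPTION_MAX}"
        )
    return warnings


print(check_snippet_lengths("SEO Interview Questions and Answers",
                            "Common SEO interview questions with short answers."))
# []
```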

The following techniques help decrease the loading time of a website:

- Optimize images: Compress images to reduce their file size without noticeably affecting their quality, and resize them with tools such as Photoshop or picresize.com. Also, use fewer images, and avoid them unless they are necessary.

- Use browser caching: Caching temporarily stores copies of web resources, which reduces bandwidth use and improves performance. With browser caching, a repeat visitor's browser loads files such as images, stylesheets, and scripts from its local cache instead of downloading them from the server again. This saves server time and makes pages load faster for your repeat visitors.

- Use a content delivery network (CDN): A CDN distributes copies of your site's content across several servers in different geographical locations. When visitors request your site, they receive the data from the server closest to them. The closer the server, the faster the content loads.

- Avoid self-hosted videos: Video files are usually large, so uploading them directly to your web pages can increase their loading time. Instead, host them on video services such as YouTube or Vimeo.

- Use CSS sprites to reduce HTTP requests: A CSS sprite combines several images into a single image file, and CSS background positioning shows only the part needed in each place. Because the browser downloads one file instead of many, fewer HTTP requests are made, which saves server bandwidth and decreases the page's loading time.
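Browser caching is controlled by HTTP response headers such as `Cache-Control`. This is a minimal sketch that assumes a site served with Python's built-in `http.server`. In production, you would normally set this header in your web server or CDN configuration instead. The handler adds a `Cache-Control` header telling browsers they may reuse each response for one day, and the one-day `max-age` is an arbitrary example value.

```python
from http.server import HTTPServer, SimpleHTTPRequestHandler

ONE_DAY = 24 * 60 * 60  # max-age is expressed in seconds


class CachingHandler(SimpleHTTPRequestHandler):
    """Serves files from the current directory and marks every response cacheable."""

    def end_headers(self) -> None:
        # Allow browsers (and shared caches) to reuse the response for one day
        # before requesting it from the server again.
        self.send_header("Cache-Control", f"public, max-age={ONE_DAY}")
        super().end_headers()


def run(port: int = 8000) -> None:
    """Serve the current directory on http://127.0.0.1:<port>/ until interrupted."""
    HTTPServer(("127.0.0.1", port), CachingHandler).serve_forever()
```

Calling `run()` starts the server. A repeat visitor's browser can then load already-downloaded files from its cache for up to a day without contacting the server.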

Google Webmaster Tools used to be preferred over Google Analytics for SEO, because it includes almost all the essential tools as well as some analytics data for search engine optimization. Now that Webmaster Tools data can be included in Analytics, however, having access to Analytics is preferable.

A spider is a program that search engines use to index websites. It is also called a crawler or a search engine robot. A spider visits websites, reads their pages, and creates entries for the search engine index. Spiders act as data-gathering tools that visit websites to find new or updated pages, content, and links.

Spiders do not monitor sites for unethical activities. They are called spiders because they visit many sites simultaneously, covering a large area of the web. All search engines use spiders to build and update their indexes.
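The crawl loop described above can be sketched in a few lines: visit a page, record it, extract its links, and queue those links for later visits. To keep the example self-contained, this hypothetical sketch crawls an in-memory dictionary of pages instead of fetching URLs over the network. It records each page's outgoing links rather than building a full search index. A real spider would also respect robots.txt, throttle its requests, and handle many more edge cases.

```python
from collections import deque
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start: str, pages: dict) -> dict:
    """Breadth-first crawl from `start`; returns {visited page: its outgoing links}."""
    visited = {}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        if url in visited or url not in pages:
            continue  # already crawled, or a broken link with no page behind it
        extractor = LinkExtractor()
        extractor.feed(pages[url])
        visited[url] = extractor.links
        queue.extend(extractor.links)  # discovered links are crawled later
    return visited


# Hypothetical mini-site: URL path -> HTML
site = {
    "/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "/about": '<a href="/">Home</a>',
    "/blog": '<a href="/blog/post-1">Post</a> <a href="/missing">Old</a>',
    "/blog/post-1": "<p>No links here</p>",
}
print(crawl("/", site))
```

Starting from the home page, the spider discovers `/blog/post-1` only by following links, which is why pages with no inbound links are hard for crawlers to find.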

If your website has been banned or penalized by a search engine for using black hat practices and you have corrected them, you can apply for reinclusion. Reinclusion is the process of asking the search engine to re-index a site that was penalized for using black hat SEO techniques; Google calls this a reconsideration request. Google, Yahoo, and other search engines offer tools through which webmasters can submit their sites for reinclusion.

Robots.txt is a text file that tells search engine crawlers which pages, directories, or files of a website they may crawl. It is generally used to tell spiders which pages you don't want crawled. Following it is voluntary, but the spiders of major search engines obey its instructions. Note that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.
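Python's standard `urllib.robotparser` module shows how a well-behaved crawler reads these instructions before fetching a URL. The robots.txt rules, the `BadBot` user agent, and the example.com URLs below are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content for a hypothetical site
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /tmp/

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# A polite crawler checks each URL against the rules before requesting it.
for agent, url in [
    ("Googlebot", "https://www.example.com/blog/post-1"),
    ("Googlebot", "https://www.example.com/admin/login"),
    ("BadBot", "https://www.example.com/blog/post-1"),
]:
    print(agent, url, parser.can_fetch(agent, url))
```

Googlebot falls under the `*` group, so it may fetch the blog post but not the admin page. `BadBot` has its own group that disallows everything.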

The location of this file is very important. It must be placed in the root directory of the site (for example, https://www.example.com/robots.txt). Spiders do not search the whole site for a file named robots.txt; they check only the root directory. If they don't find the file there, they assume that the site has no robots.txt file and crawl the whole site.
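Because crawlers look only at the root, the robots.txt location can be derived from any page URL. Keep the scheme and host, and replace the path with `/robots.txt`. This is a minimal sketch using the standard `urllib.parse` module, and the function name `robots_txt_url` is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the only location crawlers check for robots.txt: /robots.txt at the host root."""
    parts = urlsplit(page_url)
    # Keep scheme and host (including any port); drop the page's path, query, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/2024/post.html?ref=home"))
# https://www.example.com/robots.txt
```

The host part includes any subdomain and port, so `https://blog.example.com` maps to its own robots.txt. This reflects the fact that robots.txt rules apply only to the host they are served from.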